Google came out with two intriguing pieces of hardware this week, both of which represent tectonic shifts in the industry. I don't normally talk much about gadgets, but these may have huge implications for how we interact with data.

Let me start with the high-end Google Chromebook. Google made the jump to the Chromebook in 2012 with a Chrome OS based laptop that melded tablet and touch sensibilities with a keyboard, pricing it at $250. This has effectively set a low end that is competitive with most tablets, while still providing a keyboard. Chrome OS is not Android, Google's other operating system and the one most heavily used for mobile devices and tablets, but it increasingly represents where Google is heading - a JavaScript based HTML5 environment that nonetheless has access to key sensors, localized data stores and services. The Chromebook Pixel, announced this week, is in many respects simply a higher quality Chromebook, with a reinforced metal casing, more internal space and a faster processor, but it does not deviate significantly from Google's belief that it does not need to be running Windows or Apple's OS X - and that it can survive quite well, thank you, in the higher end notebook market, where access to specialized tools (Adobe Photoshop being a prime example) is often a major deciding factor.

This is a big assumption, but I suspect that it is also the correct one. Google has created an ecosystem for young developers and companies to establish themselves in an emerging market, rather than trying to compete against multi-billion dollar companies that are already well established in the Windows world, and in the process has awakened an interest in re-imagining those products without the constraints of sunk costs and million-line code bases. Give them a more powerful set of CPUs and GPUs than exists at the low end, an architecture that abstracts away a lot of hardware differences, and a hungry market, and these developers will likely create the next Photoshop, or Office, or whatever, one that takes advantage of the web and cloud computing (parallel virtualized servers) to do the heavy lifting.

3D graphics are a prime example of this. Most 3D suites in the PC world have two phases - a modeling phase where the artist sets up the initial meshes with low resolution shading (something that can be readily done with a decent GPU) and then a render phase that does the calculations to provide much higher resolution images with various light vector analysis and filtering. This latter phase is eminently suited for cloud computing, and if that were built in from the start, those renderings could be done relatively seamlessly, with changes in state in the model being transmitted directly to the server. In this way processor intensive applications offload most of that cost to external dedicated systems more or less in real time.

There is, of course, always the question of connectivity. If you do not have Internet connectivity, then you can't do that processing. Again, I'd question whether that is all that significant an issue today, and whether this again is simply a lack of recognition about the power of decoupling. Most applications today either are connectivity apps of some sort anyway, or they are apps that have the above split between pre-process work and in-process work. I can write a blog post or a chapter right now within Chrome and save that information to local storage if there is no Internet, then transmit those updates as a background process to the server. I can write code and even have it debugged on a local device, but have it actually run on an instance in the cloud. In some respects, we're returning to a batch processing model of yore, but whereas in the 1960s and 70s such batch processing was with code, in the '10s the "coding" IS the visible application and the batching is the updating or heavy processing that's now done with virtual instances.
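The save-locally-then-sync pattern described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `Outbox` and `FakeServer` are hypothetical names, the "local storage" is an in-memory queue, and the transport is a stand-in for whatever a real application would use.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class Outbox:
    """Holds edits locally until they can be delivered to a server."""

    def __init__(self, send: Callable[[Dict], bool]) -> None:
        # `send` is a hypothetical transport that returns True on success.
        self._send = send
        self._pending: Deque[Dict] = deque()

    def save(self, edit: Dict) -> None:
        """Record an edit locally; this works with or without connectivity."""
        self._pending.append(edit)

    def flush(self) -> int:
        """Send queued edits in order, stopping at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # still offline; keep the edit for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        """Number of edits still waiting to be delivered."""
        return len(self._pending)


class FakeServer:
    """Stand-in for a remote service whose connectivity can be toggled."""

    def __init__(self) -> None:
        self.online = False
        self.received: List[Dict] = []

    def receive(self, edit: Dict) -> bool:
        if not self.online:
            return False
        self.received.append(edit)
        return True
```

A background task would call `flush()` periodically; while the connection is down the edits simply accumulate, and they are delivered in their original order once it returns.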

Given this, a channel friendly, high margin laptop based on Chrome is not really that hard a pill to swallow. Its audience is going to be the same people who put down $1500 for a "game machine" - developers, gamers looking for an experience in a new medium, people who push their systems to the limit. Distribution channels like these machines both because the margins on them are usually pretty good, and because the core audience are people who do not have any qualms about spending a couple of thousand dollars on a "work" machine. These will be the people writing the new software for it, and given the explosion of app developers and the various Android platforms to date, they will see this as a tremendous new opportunity.

It's also a shot just below the waterline for Microsoft, which is having difficulty in getting traction for either Windows 8 or Surface, its own hardware play in the tablet space. Part of this has to do with the fact that it is easy to dismiss mobile apps as "toys" - so Microsoft's approach was to essentially port the Windows DNA into a tablet device. The problem is that the Windows DNA was developed mostly before the rise of cloud computing and mobile devices, and consequently still seeks to put as much of the computing onus on the desktop or laptop system as possible, rather than truly embracing a hybrid strategy. This means that those developing Windows 8 platform apps for mobile will be trying to do this conversion from already existing application bases, which in turn will mean longer time to market even as Android app delivery times shrink.

For Apple, this could be a problem as well, though it could also be an opportunity. Apple really has comparatively little difficulty in being #2 in a market. It's less expensive to defend, you tend to build up a loyalist base that the #1 often doesn't have because they are trying a one-size-fits-all strategy, and when there are ebb times for the company (as I believe there are now as it goes through a transition from Steve Jobs to Tim Cook) it provides a chance for the company to reorganize, and to be the "innovation leader". Apple will likely end up in a position relative to Google in the mobile space similar to the one it held for years relative to Microsoft in the PC world. (Yes, Apple is currently still #1 in tablets, but I do not expect that position to last beyond 2013.) Given that Google is likely not making that much money selling hardware (and really can't, given its Android licensing strategy), Apple can actually take advantage of Google's presence to keep Microsoft locked out of the mobile/pad/tablet space while making more money on hardware sales.

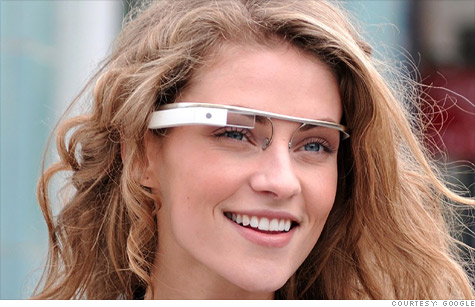

If Google's move with Pixel was largely strategic in establishing a new baseline platform for developers, its announcement of Google Glass was both surprisingly quiet and far reaching. I have, in previous columns and elsewhere, talked about what a game changer a glasses display really is. I believe that in the long term such displays will be far more transformative than even the iPhone, and possibly the PC itself.

I think that the first ones will likely be fairly limited in terms of functionality, if what I see with Google Glass is any indication, but even those functions are impressive - the ability to take photos and videos of what you are seeing, anywhere, anytime, without needing to carry around a camera (or a phone) by itself makes this a radical innovation. The ability to search for information at a moment's notice, either through voice commands or via QR codes or bar codes, makes them indispensable aids for everything from recognizing a person's face or getting detailed information about them while in conversation to doing real time operations (from repairing a car to open heart surgery) with informatics supplied in real time. Glasses can also deliver alerts and reminders, open the way for real time conferencing and chat, and make translations on the fly feasible without having to constantly consult a mobile phone.

This doesn't even begin to touch the broader data access and data collection capabilities - again, those capabilities that arise when you use the glasses as a peripheral and let the real processing take place on the cloud. Consider for instance an autoread capability. You look at a printed document (a book, manual or newspaper, for instance) quickly, taking photos on the fly as you scan through it. Each photo gets sent to a service and is OCR'd, the text is then collected in a queue along with the associated records (with indexed links back to the images), and later you can read the text output or have a text-to-speech application (again located on the server) read the document back to you.

You go to a meeting where one person whiteboards a business plan or software architecture. You capture both video and stills, convert the layout into skeletal graphics that can be turned into finished graphics later on in a more capable environment, and build a report that can then be referenced in the next meeting or for other audiences.

Then add geolocation and network proximity capabilities into the mix. I'm thinking that the Keyhole Markup Language (KML), the markup language for Google Earth and Google Maps, will play a big part here. Subscribing to a KML channel will let you download feeds in which proximal triggers - being within a certain designated area or within proximity to a given placemark - would display messages (text, image, video, audio) that would be relevant to that area, with closer placemarks being higher resolution and farther placemarks being fuzzier and fainter. Orientation is also a factor - audio is louder when you are facing its source, and fades as you turn or move away. This would make it possible to create geo-information tours, seeing images and annotations as you come into their proximity. Some of this may be of interest to history and art buffs, but I see its bigger use being a way to catalog resources, building uses, scheduled events and so forth (as well as for geo-based gaming, though the technology will likely have to improve somewhat).
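To make the proximity and orientation idea concrete, here is a rough Python sketch of how a trigger might be scored. Everything in it is an assumption made for illustration: the `Placemark` fields, the linear fade with distance, and the cosine weighting for heading are not taken from KML or from any Google API.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class Placemark:
    name: str
    lat: float        # degrees
    lon: float        # degrees
    radius_m: float   # hypothetical trigger radius around the placemark


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points (haversine formula)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial compass bearing from point 1 to point 2, in [0, 360)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    y = math.sin(dlmb) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlmb)
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


def visibility(pm: Placemark, lat: float, lon: float) -> float:
    """1.0 at the placemark, fading linearly to 0.0 at the trigger radius."""
    d = distance_m(lat, lon, pm.lat, pm.lon)
    return max(0.0, 1.0 - d / pm.radius_m)


def audio_gain(pm: Placemark, lat: float, lon: float, heading_deg: float) -> float:
    """Visibility scaled by how directly the wearer is facing the placemark."""
    target = bearing_deg(lat, lon, pm.lat, pm.lon)
    # Smallest angle between where the wearer faces and where the placemark is.
    diff = abs((target - heading_deg + 180.0) % 360.0 - 180.0)
    facing = (1.0 + math.cos(math.radians(diff))) / 2.0
    return visibility(pm, lat, lon) * facing
```

A client would evaluate `visibility` and `audio_gain` for each subscribed placemark as the wearer moves, showing only those whose score is above zero. A real feed would need richer geometry than a single radius, such as polygons or building footprints.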

I suspect that this, combined with bar codes or QR codes, will change the retail experience beyond all recognition. Even with just a bar code reader, you can walk into a grocery store, pick up an object, look at its bar code to scan it and put it in your basket. Pricing signage and labeling becomes obsolete. When you get to the door (or at any time, for that matter) you can assent to the purchase of all items in the queue, the items are charged against your account after coupons are applied (which can be scanned asynchronously beforehand) and you leave. You may still have baggers, but cash registers become increasingly anachronistic. Fast food restaurants and drive-throughs work in similar ways - you can see what's available, order it, purchase it and have it waiting, along with the requisite upsell items suggested, all without ever waiting in a line.
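The scan-as-you-go checkout described above amounts to a running queue of bar codes plus a set of coupons applied at the end. The following Python sketch shows one way that bookkeeping could look. The `Cart` class, the price catalog and the per-item coupon rule are all hypothetical, and amounts are kept in integer cents to avoid rounding problems.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Cart:
    # Hypothetical store feed: bar code -> price in cents.
    catalog: Dict[str, int]
    items: List[str] = field(default_factory=list)
    # Bar code -> cents off each matching item.
    coupons: Dict[str, int] = field(default_factory=dict)

    def scan(self, barcode: str) -> None:
        """Add an item to the queue when its bar code is looked at."""
        if barcode not in self.catalog:
            raise KeyError(f"unknown bar code: {barcode}")
        self.items.append(barcode)

    def add_coupon(self, barcode: str, cents_off: int) -> None:
        """Coupons may be scanned at any time, before or after the items."""
        self.coupons[barcode] = cents_off

    def total_cents(self) -> int:
        """Amount charged when the shopper assents to the purchase."""
        total = 0
        for code in self.items:
            price = self.catalog[code]
            # A coupon can never make an item cost less than zero.
            discount = min(self.coupons.get(code, 0), price)
            total += price - discount
        return total
```

Because the total is computed only when the shopper assents, coupons scanned before the matching items still apply, which matches the asynchronous scanning mentioned above.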

Combine this with RFID chips on smart packages, and not only do you effectively know where everything is, from the shoes you bought to the loaf of bread, but you can also track inventory levels and spoilage, and can even tell whether or not the dishes in the dishwasher are clean or dirty. RFID badges at conferences allow you to know when events are scheduled, where they are (and how to get there), and who you are talking to (logging that person for later retrieval of their data, from their bio to the panels they are speaking at to the papers that they wrote). Because glasses themselves have wireless communication, they also can effectively double as badges themselves.

Such glasses will actually be the death knell of the smart phone, though not of the handheld. Indeed, for a while I suspect that the handheld and the tablet will in fact be the equivalent of a mid-tier server for smart phones, and there are form factors for which tablets of various sizes make far more sense than glasses. However, telecommunications won't be one of these, if only because palm-sized devices generally must be used as phonesets for voice communication, which in turn means they can't be used as computers.

On the other hand, it's not hard to imagine a Bluetooth adapter that would automatically connect to glasses when you get in your car or truck, turning the glasses into a heads-up display. This is part of the broader evolution of the car itself into a mobile platform that would not only contain "traditional" information such as velocity, fuel gauges and RPMs but would also let you plug into forward, side, and rear mounted cameras, get diagnostic information about the car itself (tire pressures, engine heat, electrical system integrity) and provide navigational assistance and dynamic maps. It also ties into security - the car won't start or even open its doors without relevant password keys and the physical proximity of the glasses, though it can be opened from an app with the relevant keys, for those cases where you lock your glasses in the car.

Additionally, (though this doesn't apply to Google Glass yet, since it is essentially a mounted monocle at the moment) three dimensional visualization becomes truly possible if you have two such glasses set up for parallax viewing or recording. This isn't just a neat visual effect, by the way - parallax cameras mounted on a car give the car's processor depth perception which can be augmented with radar or laser systems. In effect, the glasses can be used with external rigs (such as a car) to allow switching between normal vision, radar, night vision, IR and eventually modeled vision.

One final point - I watch my two teenage daughters interact with their peers through screen based media, and expect full well that this will carry over into the "comps" (computer glasses) realm, though not necessarily in ways that might be expected. My suspicion is that at least for a while, glasses will augment handhelds, and these will still be the primary means for entering text data. The next logical device is a glove, which can be used with virtual keyboards. Texting has an advantage over audio in a number of ways for asynchronous communication, and even with visual and audio sharing, I do not see text input withering away.

The point with all of these is that "comps" - computer glasses - provide the entry point into augmented reality. They hit the two big sensory input areas - sight and sound - and combine them with proximity and time-based information, access to cloud based services and social media streams. The app space potential for this medium is also huge, as the form factor involved makes all kinds of apps feasible that were at best awkward and at worst unthinkable with other interfaces.

Short term, I think Google Glass should be in huge demand among the early adopter crowd, even though I believe that the interfaces I've seen so far are primitive compared to where they will be in 3-5 years. However, this is also a good time to become familiar with the form factor, as I suspect it will be a radical shift from the way things are now. The biggest challenge I had in writing this article was in NOT coming up with all kinds of novel use cases for them, as I could spend days doing so.

Kurt Cagle is an author and information architect for Avalon Consulting, LLC. His latest book, HTML5 Graphics with SVG and CSS3, will be available from O'Reilly Media soon.

All well and good – we can't stop progress - but (needless to say to someone like you) this has serious implications for ALL of our Fourth Amendment rights to privacy. *sigh* I suppose if we're going to have a police state it might as well be "of the people, by the people, for the people."
