Google came out with two intriguing pieces of hardware this week, both of which represent tectonic shifts in the industry. I don't normally talk much about gadgets, but these may have huge implications for how we interact with data.

Let me start with the high-end Google Chromebook. Google made the jump to the low-cost Chromebook in 2012 with a Chrome OS-based laptop that melded the tablet experience with a keyboard, pricing it at $250. This effectively set a low end that is competitive with most tablets while still providing a keyboard. Chrome OS is not Android (Google's other operating system, and the one most heavily used on mobile phones and tablets), but it increasingly represents where Google is heading: a JavaScript-based HTML5 environment that nonetheless has access to key sensors, local data stores and services. The Chromebook Pixel, announced this week, is in many respects simply a higher-quality Chromebook, with a reinforced metal casing, more internal space and a faster processor, but it does not deviate significantly from Google's belief that it does not need to run Windows or Apple's OS X, and that it can survive quite well, thank you, in the higher-end notebook market, where access to specialized tools (Adobe Photoshop being a prime example) is often a major deciding factor.

This is a big assumption, but I suspect that it is also the correct one. Google has created an ecosystem for young developers and companies to establish themselves in an emerging market, rather than trying to compete against multi-billion-dollar companies that are already well established in the Windows world, and in the process has awakened an interest in reimagining those products without the constraints of sunk costs and million-line code bases. Give these developers more powerful CPUs and GPUs than exist at the low end, an architecture that abstracts away many hardware differences, and a hungry market, and they will likely create the next Photoshop, or Office, or whatever, one that takes advantage of the web and cloud computing (parallel virtualized servers) to do the heavy lifting.

3D graphics are a prime example of this. Most 3D suites in the PC world have two modes: a modeling mode, where the artist sets up the initial meshes with low-resolution shading (something a decent GPU can readily handle), and a render phase that performs the lighting calculations and filtering needed for much higher-resolution images. This latter phase is eminently suited for cloud computing, and if such offloading were built in from the start, those renderings could be done relatively seamlessly, with changes in the model's state transmitted directly to the server. In this way, processor-intensive applications can offload most of that cost to external dedicated systems more or less in real time.

There is, of course, always the question of connectivity. If you do not have Internet connectivity, then you can't do that processing. Again, I'd question whether that is all that significant an issue today, and whether this again is simply a lack of recognition about the power of decoupling. Most applications today either are connectivity apps of some sort anyway, or they are apps that have the above split between pre-process work and in-process work. I can write a blog post or a chapter right now within Chrome and save that information to local storage if there is no Internet, then transmit those updates as a background process to the server. I can write code and even have it debugged on a local device, but have it actually run on an instance in the cloud. In some respects, we're returning to a batch processing model of yore, but whereas in the 1960s and 70s such batch processing was with code, in the '10s the "coding" IS the visible application and the batching is the updating or heavy processing that's now done with virtual instances.
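The save-locally-then-sync pattern described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how Chrome's local storage actually works: `OfflineDraftStore` and its `send` upload hook are hypothetical names, and a real client would also persist the queue and resolve conflicts.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict


@dataclass
class OfflineDraftStore:
    """Hypothetical offline-first store: edits land locally, uploads happen later."""
    send: Callable[[str, str], bool]  # hypothetical upload hook; True means the server accepted it
    local: Dict[str, str] = field(default_factory=dict)
    pending: Deque[str] = field(default_factory=deque)

    def save(self, doc_id: str, text: str) -> None:
        # Always write locally first, so nothing is lost while offline.
        self.local[doc_id] = text
        # Queue each document once; repeated saves just update the local copy.
        if doc_id not in self.pending:
            self.pending.append(doc_id)

    def sync(self, online: bool) -> int:
        """Upload queued documents in order, stopping at the first failure."""
        sent = 0
        while online and self.pending:
            doc_id = self.pending[0]
            if not self.send(doc_id, self.local[doc_id]):
                break  # keep it queued and retry on the next background sync
            self.pending.popleft()
            sent += 1
        return sent


uploaded: Dict[str, str] = {}


def fake_send(doc_id: str, text: str) -> bool:
    uploaded[doc_id] = text
    return True


store = OfflineDraftStore(send=fake_send)
store.save("chapter-1", "first draft")
store.save("chapter-1", "second draft")  # still a single pending upload
print(store.sync(online=False))  # 0: nothing is sent while offline
print(store.sync(online=True))   # 1
print(uploaded)                  # {'chapter-1': 'second draft'}
```

Coalescing repeated saves means only the latest local copy goes over the wire once connectivity returns, which is the "background process" behaviour described above.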

Given this, a channel-friendly, high-margin laptop based on Chrome is not really that hard a pill to swallow. Its audience is going to be the same people who put down $1,500 for a "game machine": developers, gamers looking for an experience in a new medium, people who push their systems to the limit. Distribution channels like these machines both because the margins on them are usually pretty good and because the core audience is people who have no qualms about spending a couple of thousand dollars on a "work" machine. These will be the people writing the new software for it, and given the explosion of app developers across the various Android platforms to date, they will see this as a tremendous new opportunity.

It's also a shot just below the waterline for Microsoft, which is having difficulty in getting traction for either Windows 8 or Surface, its own hardware play in the tablet space. Part of this has to do with the fact that it is easy to dismiss mobile apps as "toys" - so Microsoft's approach was to essentially port the Windows DNA into a tablet device. The problem is that the Windows DNA was developed mostly before the rise of cloud computing and mobile devices, and consequently still seeks to put as much of the computing onus on the desktop or laptop system as possible, rather than truly embracing a hybrid strategy. This means that those developing Windows 8 platform apps for mobile will be trying to do this conversion from already existing application bases, which in turn will mean longer time to market even as Android app delivery times shrink.

For Apple, this could be a problem as well, though it could also be an opportunity. Apple really has comparatively little difficulty being #2 in a market. That position is less expensive to defend, you tend to build up a loyalist base that the #1 often doesn't have (because the #1 is pursuing a one-size-fits-all strategy), and when the company goes through ebb times (as I believe it is now, in the transition from Steve Jobs to Tim Cook) it provides a chance to reorganize and to be the "innovation leader". Apple will likely end up in the same position relative to Google in the mobile space that it held for years relative to Microsoft in the PC world. (Yes, Apple is currently still #1 in tablets, but I do not expect that position to last beyond 2013.) Given that Google is likely not making that much money selling hardware (and really can't, given its Android licensing strategy), Apple can actually take advantage of Google's presence to keep Microsoft locked out of the mobile/pad/tablet space while making more money on hardware sales.

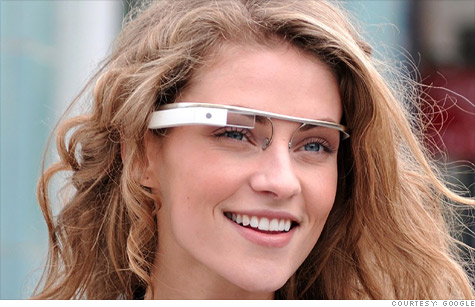

If Google's move with the Pixel was largely strategic, establishing a new baseline platform for developers, its announcement of Google Glass was both surprisingly quiet and far-reaching. I have, in previous columns and elsewhere, talked about what a game changer a glasses display really is. I believe that in the long term such displays will be far more transformative than even the iPhone, and possibly the PC itself.

I think that the first ones will likely be fairly limited in terms of functionality, if what I see with Google Glass is any indication, but even those functions are impressive. The ability to take photos and videos of what you are seeing, anywhere, anytime, without needing to carry around a camera (or a phone) by itself makes this a radical innovation. The ability to search for information at a moment's notice, either through voice commands or via QR codes or barcodes, makes them indispensable aids for everything from recognizing a person's face or getting detailed information about them while in conversation to performing real-time operations (from repairing a car to open-heart surgery) with informatics supplied in real time. They can also set up reminders and alerts, open the way for real-time conferencing and chat, and make on-the-fly translation feasible without constantly consulting a phone.

This doesn't even begin to touch the broader data access and data collection capabilities, again the capabilities that arise when you use the glasses as a peripheral and let the real processing take place in the cloud. Consider, for example, an autoread capability. You look quickly at a printed document (a book, manual or newspaper, for instance), taking photos on the fly as you scan through it. Each photo gets sent to a service and is OCR'd; the text is then collected in a queue along with the associated records (with indexed links back to the images), and later you can read the text output or have a text-to-speech application (again located on the server) read the document back to you.

You go to a meeting where someone whiteboards a business plan or software architecture. You capture both video and stills, convert the layout into skeletal graphics that can be turned into finished graphics later on in a more capable environment, and build a report that can be referenced in the next meeting or shared with other audiences.

Now add geolocation and network proximity capabilities into the mix. I'm thinking that the Keyhole Markup Language (KML), the markup language for Google Earth and Google Maps, will play a big part here. Subscribing to a KML channel will let you download feeds with proximity triggers: being within a designated area, or near a given placemark, would display messages (text, image, video, audio) relevant to that area, with closer placemarks shown at higher resolution and farther placemarks fuzzier and fainter. Orientation also plays a role: audio is louder when you are facing its source, and fades as you move away. This would make it possible to create geo-information tours, with images and annotations appearing as you come into their proximity. Some of this may be of interest to history and art buffs, but I see its bigger use as a way to catalog resources, building uses, scheduled events and so forth (as well as for geo-based gaming, though the technology will likely have to improve somewhat).
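As a rough sketch of the proximity-and-orientation idea, assume the placemarks have already been pulled out of a KML feed into plain coordinates (no KML parsing is shown). A trigger's strength can then combine great-circle (haversine) distance with how directly the wearer faces the placemark. `Placemark`, its `radius_m` field, the linear fade and the 0.5 "behind you" floor are all illustrative assumptions, not part of KML.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class Placemark:
    """A placemark already extracted from a KML feed (hypothetical structure)."""
    name: str
    lat: float
    lon: float
    radius_m: float  # trigger radius, an assumed extension
    message: str


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial compass bearing from point 1 to point 2, in degrees [0, 360)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    x = math.sin(dl) * math.cos(p2)
    y = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return math.degrees(math.atan2(x, y)) % 360


def active_triggers(lat: float, lon: float, heading_deg: float,
                    marks: List[Placemark]) -> List[Tuple[str, str, float]]:
    """(name, message, strength) for placemarks within range, strongest first.

    strength = proximity * facing: proximity falls linearly from 1 at the
    placemark to 0 at its radius; facing runs from 1 (looking straight at it)
    down to 0.5 (directly behind you).
    """
    hits = []
    for m in marks:
        d = haversine_m(lat, lon, m.lat, m.lon)
        if d > m.radius_m:
            continue  # outside the trigger area
        proximity = 1.0 - d / m.radius_m
        off_axis = math.radians(bearing_deg(lat, lon, m.lat, m.lon) - heading_deg)
        facing = 0.75 + 0.25 * math.cos(off_axis)
        hits.append((m.name, m.message, proximity * facing))
    return sorted(hits, key=lambda h: h[2], reverse=True)


marks = [
    Placemark("pier", 47.0, -122.0, 500.0, "Ferry schedule"),
    Placemark("museum", 48.0, -122.0, 500.0, "Exhibit tour"),
]
for name, message, strength in active_triggers(46.999, -122.0, 0.0, marks):
    print(f"{name}: {message} (strength {strength:.2f})")
```

Standing about 110 m south of the pier and facing north, only the pier fires; turning around halves its strength, which is one simple way to get the "louder when you face it" behaviour.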

Combined with barcodes or QR codes, I suspect this will change the retail experience beyond all recognition. Even with simple barcode reading, you can walk into a grocery store, pick up an item, look at its barcode to scan it and put it in your basket. Pricing signage and labeling become obsolete. When you get to the door (or at any time, for that matter) you can assent to the purchase of all items in the queue; the items are charged against your account after coupons (which can be scanned asynchronously beforehand) are applied, and you leave. You may still have baggers, but cash registers become increasingly anachronistic. Fast-food restaurants and drive-throughs work in similar ways: you can see what's available, order it, purchase it and have it waiting, along with the requisite suggested upsell items, all without ever waiting in line.
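The scan, queue and assent flow described above is essentially a running cart with coupons applied at the end. A minimal sketch, assuming a simple barcode-to-price catalog; `ScanAndGoCart` and its methods are hypothetical, and actually charging the shopper's account is left out.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Dict, List


@dataclass
class ScanAndGoCart:
    """Hypothetical scan-as-you-shop cart; the catalog maps barcode -> unit price."""
    catalog: Dict[str, Decimal]
    items: List[str] = field(default_factory=list)
    coupons: Dict[str, Decimal] = field(default_factory=dict)  # barcode -> discount per item

    def scan(self, barcode: str) -> None:
        # Looking at the barcode adds the item to the queue.
        if barcode not in self.catalog:
            raise KeyError(f"unknown barcode: {barcode}")
        self.items.append(barcode)

    def add_coupon(self, barcode: str, discount: Decimal) -> None:
        # Coupons can be scanned at any time, before or after the item itself.
        self.coupons[barcode] = discount

    def checkout(self) -> Decimal:
        """Total due on assent: every matching item gets its coupon, floored at zero."""
        total = Decimal("0")
        for code in self.items:
            discount = self.coupons.get(code, Decimal("0"))
            total += max(self.catalog[code] - discount, Decimal("0"))
        return total


cart = ScanAndGoCart(catalog={"0001": Decimal("3.49"), "0002": Decimal("1.99")})
cart.add_coupon("0001", Decimal("0.50"))  # scanned before the item
cart.scan("0001")
cart.scan("0002")
cart.scan("0001")
print(cart.checkout())  # 7.97
```

`Decimal` is used rather than `float` so that prices and discounts add up exactly.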

Combine this with RFID chips on smart packages, and not only do you effectively know where everything is, from the shoes you bought to the loaf of bread, but you can also track inventory levels and spoilage, and can even tell whether the dishes in the dishwasher are clean or dirty. RFID badges at conferences let you know when events are scheduled, where they are (and how to get there), and who you are talking to (logging that person for later retrieval of their data, from their bio to the panels they are speaking at to the papers they wrote). Because the glasses have wireless communication, they can also effectively double as badges themselves.

Such glasses will actually be the death knell of the smartphone, though not of the handheld. Indeed, for a while I suspect that the handheld and the tablet will in fact be the equivalent of a mid-tier server for smartphones, and there are form factors for which tablets of various sizes make far more sense than glasses. However, telecommunications won't be one of these, if only because palm-sized devices generally must be held up as handsets for voice communication, which means they can't be used as computers at the same time.

On the other hand, it's not hard to imagine a Bluetooth adapter that would automatically connect to the glasses when you get in your car or truck, turning the glasses into a heads-up display. This is part of the broader evolution of the car itself into a mobile platform that would not only show "traditional" information such as velocity, fuel level and RPM, but would also let you tap into forward, side and rear mounted cameras, get diagnostic information about the car itself (tire pressure, engine heat, electrical system integrity) and provide navigational assistance and dynamic maps. It also ties into security: the car won't start or even open its doors without the relevant password keys and the physical proximity of the glasses, though it can be opened from an app with the relevant keys, for those cases where you lock your glasses in the car.

Additionally (though this doesn't apply to Google Glass yet, since it is essentially a mounted monocle at the moment), three-dimensional visualization becomes truly possible if you have two such eyepieces set up for parallax viewing or recording. This isn't just a neat visual effect, by the way: parallax cameras mounted on a car give the car's processor depth perception, which can be augmented with radar or laser systems. In effect, the glasses can be used with external rigs (such as a car) to allow switching between normal vision, radar, night vision, IR and eventually modeled vision.
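The depth perception that a pair of parallax cameras provides comes from the standard stereo relation for two parallel, rectified cameras: depth Z = f·B/d, where f is the focal length in pixels, B the distance between the cameras (the baseline) and d the disparity, the horizontal shift in pixels of the same point between the two images. A minimal sketch; the numbers below are illustrative, not specifications of Glass or of any car.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance in metres to a point seen by two parallel, rectified cameras.

    Z = f * B / d: f is the focal length in pixels, B the camera separation in
    metres, d the horizontal shift (in pixels) of the point between the images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Illustrative numbers: a wider baseline (cameras spread across a car rather
# than an eye-width apart) resolves the same one-pixel disparity at longer range.
for baseline in (0.065, 1.5):
    print(baseline, round(depth_from_disparity(700.0, baseline, 1.0), 1))
```

Because Z varies inversely with d, small disparity errors turn into large depth errors at long range, which is where the radar or laser ranging mentioned above can help.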

One final point: I watch my two teenage daughters interact with their peers through screen-based media, and I fully expect this to carry over into the "comps" (computer glasses) realm, though not necessarily in the ways one might expect. My suspicion is that, at least for a while, glasses will augment handhelds, and handhelds will still be the primary means of entering text. The next logical device is a glove, which can be used with virtual keyboards. Text has a number of advantages over audio for asynchronous communication, and even with visual and audio sharing, I do not see text input withering away.

The point with all of these is that "comps" - computer glasses - provide the entry point into augmented reality. They hit the two big sensory input areas - sight and sound - and combine them with proximity and time-based information, access to cloud based services and social media streams. The app space potential for this medium is also huge, as the form factor involved makes all kinds of apps feasible that were at best awkward and at worst unthinkable with other interfaces.

Short term, I think Google Glass should be in huge demand among the early adopter crowd, even though I believe that the interfaces I've seen so far are primitive compared to where they will be in 3-5 years. However, this is also a good time to become familiar with the form factor, as I suspect it will be a radical shift from the way things are now. The biggest challenge I had in writing this article was in NOT coming up with all kinds of novel use cases for them, as I could spend days doing so.

Kurt Cagle is an author and information architect for Avalon Consulting, LLC. His latest book, HTML5 Graphics with SVG and CSS3, will be available from O'Reilly Media soon.

A website for exploring the Semantic Web, Data Modeling, Asset Management and XML Data Management.

Sunday, February 24, 2013

Sunday, February 17, 2013

Semantics and Airline Flight

Airline Routing Maps (courtesy United Airlines)

Relational databases, for instance, are remarkably good for situations where your data is regular, where you have clearly defined relational keys between "flat" objects, where there is comparatively little variability in data structures, and typically where the relevant data is contained within a single data store. This still describes the majority of use cases even in today's world, and as such it is unlikely that relational database (RDBMS) systems are going to stop being used any time soon.

Document and hash stores provide a second way of organizing data, and these generally work best when the data in question has greater variability in structure, is hierarchical, and can be organized as a discrete entity. XML and JSON are both good tools for representing this kind of information: XML works better when the structures in question are largely narrative in nature (where you need text interspersed with markup), while JSON is increasingly seen as useful for situations where you are communicating primarily property information or sequences of properties.

Such document stores typically index using name/value pairs, but they have also effectively denormalized part of the underlying structure along "preferred" axes, in effect transferring the load of normalization from the document database to the application user. This in turn makes these formats superior for data transfer as well, especially since they have no formal requirement to transmit the schema along with the documents, which is not typically the case with relational databases.

Semantic databases (triple stores, quad stores, or named graph databases) fall into a somewhat different category, and are in effect a form of columnar rather than record-oriented database. In this case, most data in the database can be thought of as a triple consisting of a record identifier, a table column name, and the column value where the two intersect, with that value in turn being either a key to another record identifier or an atomic value. Consider, for example, a simple table of airports and the airports they route to:

| AirportID | Label | Status | RoutesTo |
|---|---|---|---|
| SEA | Seattle Tacoma Airport | Active | SFO |
| SEA | Seattle Tacoma Airport | Active | LAX |
| SFO | San Francisco Airport | Active | SEA |
| SFO | San Francisco Airport | Active | LAX |
| LAX | Los Angeles Airport | Active | SEA |
| LAX | Los Angeles Airport | Active | SFO |

If we use <airport:SFO> to identify a given airport (San Francisco International), <airport:routesTo> to identify the column that contains routing information and <airport:SEA> to contain the identifier reference to another airport (here Seattle-Tacoma International Airport), then the set:

```turtle
<airport:SFO> <airport:routesTo> <airport:SEA>.
```

creates an assertion that can be read as "SFO routes to SEA". This is what a Semantic Triple Store deals with.
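To make the cell-to-triple idea concrete, the sketch below turns the rows of the routing table above into subject/predicate/object strings in the same angle-bracket style. The `<airport:label>` predicate and the quoting of the label as a literal are assumptions for illustration; the point is that each distinct (record, column, value) cell becomes exactly one triple, so the repeated Label and Status values collapse.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]

# Rows of the flat AirportID / Label / Status / RoutesTo table.
ROWS = [
    ("SEA", "Seattle Tacoma Airport", "Active", "SFO"),
    ("SEA", "Seattle Tacoma Airport", "Active", "LAX"),
    ("SFO", "San Francisco Airport", "Active", "SEA"),
    ("SFO", "San Francisco Airport", "Active", "LAX"),
    ("LAX", "Los Angeles Airport", "Active", "SEA"),
    ("LAX", "Los Angeles Airport", "Active", "SFO"),
]


def rows_to_triples(rows) -> List[Triple]:
    """One triple per distinct (record, column, value) cell, in first-seen order."""
    triples: List[Triple] = []
    for airport, label, status, routes_to in rows:
        subject = f"<airport:{airport}>"
        triples += [
            (subject, "<airport:label>", f'"{label}"'),                 # atomic value
            (subject, "<airport:status>", f"<status:{status}>"),
            (subject, "<airport:routesTo>", f"<airport:{routes_to}>"),  # key to another record
        ]
    return list(dict.fromkeys(triples))  # drop duplicates, keep order


for s, p, o in rows_to_triples(ROWS):
    print(s, p, o + ".")
```

Six rows of four columns shrink to twelve triples: three labels, three statuses and six routes.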

Note that there is a fair amount of redundancy in most relational databases. Indeed, technically the above should properly be two data sets:

| RecordID | AirportSign | Label | Status | AirportRouteKey |
|---|---|---|---|---|
| 1 | SEA | Seattle Tacoma Airport | Active | 101 |
| 2 | SFO | San Francisco Airport | Active | 102 |
| 3 | LAX | Los Angeles Airport | Active | 103 |

| RecordID | AirportRouteKey | AirportRecordID |
|---|---|---|
| 1 | 101 | 2 |
| 2 | 101 | 3 |
| 3 | 102 | 1 |
| 4 | 102 | 3 |
| 5 | 103 | 1 |
| 6 | 103 | 2 |

Such normalization makes it easier to perform joins internally, but at the cost of lucidity and of redundancy in the joined results, and it makes the corresponding queries more complex to write.
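Getting back from the two normalized tables to the original "routes to" pairs takes two joins: airports to the route table on AirportRouteKey, then the route table back to airports on AirportRecordID. A minimal sketch with plain Python lists standing in for the tables:

```python
# The two normalized tables, as plain tuples.
AIRPORTS = [  # (RecordID, AirportSign, Label, Status, AirportRouteKey)
    (1, "SEA", "Seattle Tacoma Airport", "Active", 101),
    (2, "SFO", "San Francisco Airport", "Active", 102),
    (3, "LAX", "Los Angeles Airport", "Active", 103),
]
ROUTES = [  # (RecordID, AirportRouteKey, AirportRecordID)
    (1, 101, 2), (2, 101, 3),
    (3, 102, 1), (4, 102, 3),
    (5, 103, 1), (6, 103, 2),
]


def routes_to():
    """Recover (from, to) airport-sign pairs by joining airports -> routes -> airports."""
    sign_by_record = {record_id: sign for record_id, sign, *_ in AIRPORTS}
    pairs = []
    for _, sign, _, _, route_key in AIRPORTS:
        for _, key, target_record in ROUTES:
            if key == route_key:  # first join: on AirportRouteKey
                pairs.append((sign, sign_by_record[target_record]))  # second join: on RecordID
    return pairs


print(routes_to())
```

Even for three airports, answering "where can I fly from SEA?" means threading through two keys, which is the query complexity the paragraph above refers to.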

The Semantic approach tends to take a sparser style, making the identifier for a given resource its own URI and identifying links through similar URIs. It does so by breaking tables into individual columns and then mapping the relationships between the resource identifier (the subject) and the object of the column property, as described above. However, it also has the effect of shifting the focus away from tables and towards the notion of distinct kinds of resources. We first have the concept of airports, and their connectedness:

```turtle
<airport:SEA> <rdf:type> <class:Airport>.
<airport:SFO> <rdf:type> <class:Airport>.
<airport:LAX> <rdf:type> <class:Airport>.
<airport:SEA> <airport:routesTo> <airport:SFO>.
<airport:SEA> <airport:routesTo> <airport:LAX>.
<airport:SFO> <airport:routesTo> <airport:SEA>.
<airport:SFO> <airport:routesTo> <airport:LAX>.
<airport:LAX> <airport:routesTo> <airport:SEA>.
<airport:LAX> <airport:routesTo> <airport:SFO>.
```

However, these routes, supplied here as a verb (the <airport:routesTo> predicate), can also be manifest as objects (route objects) in their own right, which makes certain types of computations easier.

```turtle
<route:SEASFO> <rdf:type> <class:Route>.
<route:SEALAX> <rdf:type> <class:Route>.
<route:SFOLAX> <rdf:type> <class:Route>.
<route:SFOSEA> <rdf:type> <class:Route>.
<route:LAXSFO> <rdf:type> <class:Route>.
<route:LAXSEA> <rdf:type> <class:Route>.

<route:SEASFO> <route:fromAirport> <airport:SEA>.
<route:SEASFO> <route:toAirport> <airport:SFO>.
<route:SEALAX> <route:fromAirport> <airport:SEA>.
<route:SEALAX> <route:toAirport> <airport:LAX>.
<route:SFOLAX> <route:fromAirport> <airport:SFO>.
<route:SFOLAX> <route:toAirport> <airport:LAX>.
<route:SFOSEA> <route:fromAirport> <airport:SFO>.
<route:SFOSEA> <route:toAirport> <airport:SEA>.
<route:LAXSFO> <route:fromAirport> <airport:LAX>.
<route:LAXSFO> <route:toAirport> <airport:SFO>.
<route:LAXSEA> <route:fromAirport> <airport:LAX>.
<route:LAXSEA> <route:toAirport> <airport:SEA>.
```

The <airport:routesTo> relationship can then be defined in terms of these route objects with a SPARQL CONSTRUCT query:

```sparql
CONSTRUCT {?airport1 <airport:routesTo> ?airport2} WHERE {
    ?route <rdf:type> <class:Route>.
    ?route <route:fromAirport> ?airport1.
    ?route <route:toAirport> ?airport2.
}
```

This idea of construction is important - you can think of it as being a formal statement or definition of a given term in a taxonomy by using other terms. In this case we're defining the airport:routesTo operation in terms of the route object, making it possible to look at the same problem in two distinct ways.
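What the CONSTRUCT does can be mimicked over a plain list of triples, which makes the "same fact, two views" point concrete. This is only an illustrative re-implementation of that single pattern match in Python, not how a triple store actually evaluates SPARQL.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]


def construct_routes_to(triples: List[Triple]) -> Set[Triple]:
    """Mimic the CONSTRUCT: one routesTo triple per typed route's from/to pair."""
    # Group objects by subject and predicate: {subject: {predicate: [objects]}}
    props: Dict[str, Dict[str, List[str]]] = defaultdict(dict)
    for s, p, o in triples:
        props[s].setdefault(p, []).append(o)

    result: Set[Triple] = set()
    for values in props.values():
        if "<class:Route>" not in values.get("<rdf:type>", []):
            continue  # the ?route <rdf:type> <class:Route> pattern must match
        for a1 in values.get("<route:fromAirport>", []):
            for a2 in values.get("<route:toAirport>", []):
                result.add((a1, "<airport:routesTo>", a2))
    return result


data = [
    ("<route:SEASFO>", "<rdf:type>", "<class:Route>"),
    ("<route:SEASFO>", "<route:fromAirport>", "<airport:SEA>"),
    ("<route:SEASFO>", "<route:toAirport>", "<airport:SFO>"),
    ("<route:SFOLAX>", "<rdf:type>", "<class:Route>"),
    ("<route:SFOLAX>", "<route:fromAirport>", "<airport:SFO>"),
    ("<route:SFOLAX>", "<route:toAirport>", "<airport:LAX>"),
]
for triple in sorted(construct_routes_to(data)):
    print(" ".join(triple) + ".")
```

The result is a set because a CONSTRUCT returns a graph, in which duplicate triples have no meaning.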

Arguably, for simple problems, this is more work than it's worth. But what if you're dealing with problems that aren't so simple? Consider a typical routing problem: you are at the airport in Seattle (SEA) and you want to get to Chicago O'Hare (ORD). There may be a single flight connecting the two ... but then again, there may not be, in which case you have to take one or more connecting flights. It's at this level that the problem becomes considerably harder, because you are now dealing with graph traversal. With three airports, there are six potential orderings of the airports (3!); with four airports, up to 24 (4!). By the time you get up to ten airports you're dealing with 10! = 3,628,800 potential routes. This becomes more complex still because you then have to cull out of those three million plus routes all circular subroutes (routes that create loops), and then optimize so that the passengers involved spend as little time in the air as possible, both for the passengers' comfort and because you're consuming more fuel with every mile a passenger travels.
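The counts in this paragraph follow n!, the number of ways to order n airports. A quick check that also brute-forces the small cases:

```python
import math
from itertools import permutations

# Brute force for small n: count every ordering of n airports explicitly.
for n in (3, 4):
    assert math.factorial(n) == sum(1 for _ in permutations(range(n)))

for n in (3, 4, 10):
    print(n, math.factorial(n))  # 3 6, then 4 24, then 10 3628800
```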

Playing with the model a bit brings a couple of other assumptions into play, one being that it should be possible to attach one or more flights to a given route. The multiple flights may leave at different times of the day, may use different aircraft and so forth. For the purposes of this article, a few simplifying assumptions are made. First, each flight has a fixed flight time that is used to determine the best flight paths (and could indirectly be used to determine ticket costs, though that's not covered). Second, trips are ordered first by the number of flights it takes to get from A to B, and secondarily by transit time. Finally, this simulation only provides solutions for up to four flights in a given trip, though generalizing it is not hard.

Assignments of flights to routes are straightforward:

```turtle
<route:SEASFO> <route:flight> <flight:FL1>.
<route:SFOLAX> <route:flight> <flight:FL2>.
<route:LAXDEN> <route:flight> <flight:FL3>.
<route:SFOLAS> <route:flight> <flight:FL4>.
<route:LAXLAS> <route:flight> <flight:FL5>.
```

Similarly flight times (in hours) are given as:

```turtle
<flight:FL1> <flight:flightTime> 2.0.
<flight:FL2> <flight:flightTime> 2.0.
<flight:FL3> <flight:flightTime> 3.0.
<flight:FL4> <flight:flightTime> 1.4.
...
```

Two more conditions are modeled, and one is deliberately left out. First, a given flight can be cancelled, which effectively takes it out of the routing path and can affect several potential paths. Similarly, an airport can close for some reason, such as adverse weather, forcing different routings. The biggest simplification, at least for this simulation, is that scheduling is not taken into account: there is no check that a given flight is actually available at the time it's needed. This can also be modeled semantically (and is not really all that hard), but it is outside the scope of this article.
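Before turning to SPARQL, it may help to see the same search written as an ordinary depth-first traversal. This sketch uses a different technique from the triple-store approach in this article. It holds flights as simple records, skips cancelled flights and closed airports, never revisits an airport, caps trips at four flights, and ranks results by number of flights and then total flight time. Like the model above, it ignores schedules. The FL1, FL2 and FL4 times come from the triples above; FL5's time and the flights `X1` to `X3` are made up for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

Trip = Tuple[int, float, List[str]]  # (number of flights, total hours, flight ids)


@dataclass(frozen=True)
class Flight:
    flight_id: str
    origin: str
    dest: str
    hours: float
    active: bool = True  # False models a cancelled flight


def find_trips(flights: List[Flight], closed: Set[str], src: str, dest: str,
               max_flights: int = 4) -> List[Trip]:
    """Loop-free trips from src to dest (src != dest assumed), best first."""
    if src in closed or dest in closed:
        return []
    by_origin: Dict[str, List[Flight]] = {}
    for f in flights:
        if f.active:
            by_origin.setdefault(f.origin, []).append(f)

    trips: List[Trip] = []

    def extend(airport: str, visited: Set[str], legs: List[Flight]) -> None:
        if airport == dest:
            total = round(sum(leg.hours for leg in legs), 2)
            trips.append((len(legs), total, [leg.flight_id for leg in legs]))
            return
        if len(legs) == max_flights:
            return
        for f in by_origin.get(airport, []):
            if f.dest in visited or f.dest in closed:
                continue  # no loops, no closed airports
            extend(f.dest, visited | {f.dest}, legs + [f])

    extend(src, {src}, [])
    # Same ordering as ORDER BY ?numFlights ?flightTime
    return sorted(trips, key=lambda t: (t[0], t[1]))


flights = [
    Flight("FL1", "SEA", "SFO", 2.0),
    Flight("FL2", "SFO", "LAX", 2.0),
    Flight("FL4", "SFO", "LAS", 1.4),
    Flight("FL5", "LAX", "LAS", 1.2),  # made-up time
    Flight("X1", "LAS", "ORD", 5.0),   # made-up flight
    Flight("X2", "SEA", "ORD", 7.0),   # made-up flight
    Flight("X3", "LAX", "ORD", 6.0),   # made-up flight
]
for trip in find_trips(flights, closed=set(), src="SEA", dest="ORD"):
    print(trip)
```

Closing LAX, or marking a flight inactive, simply prunes branches from the search, which is what the status checks in the SPARQL version accomplish declaratively.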

With these conditions, it's possible to create a SPARQL query that will show, for a given trip between two airports, the potential paths that are available, the number of flights needed in the total trip, and the total time for that trip.

```sparql
SELECT DISTINCT ?airportSrc ?airport2 ?airport3 ?airport4 ?airportDest
       ?flight1 ?flight2 ?flight3 ?flight4 ?numFlights ?flightTime
WHERE {
  # Source and destination airports (normally passed in as parameters)
  ?airportSrc <owl:sameAs> <airport:SEA>.
  ?airportDest <owl:sameAs> <airport:ORD>.
  ?airportSrc <airport:status> <status:Active>.
  ?airportDest <airport:status> <status:Active>.

  # One flight: a direct route
  {
    ?airportSrc <airport:routesTo> ?airportDest.
    ?route1 <route:fromAirport> ?airportSrc.
    ?route1 <route:toAirport> ?airportDest.
    ?route1 <route:flight> ?flight1.
    ?flight1 <flight:flightTime> ?flightTime.
    ?flight1 <flight:status> <status:Active>.
    BIND ((1) as ?numFlights)
  }
  UNION
  # Two flights: Src -> airport2 -> Dest
  {
    ?airportSrc <airport:routesTo> ?airport2.
    ?airport2 <airport:routesTo> ?airportDest.
    ?airportSrc <airport:status> <status:Active>.
    ?airport2 <airport:status> <status:Active>.
    ?airportDest <airport:status> <status:Active>.
    ?route1 <route:fromAirport> ?airportSrc.
    ?route1 <route:toAirport> ?airport2.
    ?route1 <route:flight> ?flight1.
    ?flight1 <flight:flightTime> ?flightTime1.
    ?route2 <route:fromAirport> ?airport2.
    ?route2 <route:toAirport> ?airportDest.
    ?route2 <route:flight> ?flight2.
    ?flight2 <flight:flightTime> ?flightTime2.
    ?flight1 <flight:status> <status:Active>.
    ?flight2 <flight:status> <status:Active>.
    BIND((?flightTime1 + ?flightTime2) AS ?flightTime)
    BIND ((2) as ?numFlights)
  }
  UNION
  # Three flights: Src -> airport2 -> airport3 -> Dest
  {
    ?airportSrc <airport:routesTo> ?airport2.
    ?airport2 <airport:routesTo> ?airport3.
    ?airport3 <airport:routesTo> ?airportDest.
    ?airportSrc <airport:status> <status:Active>.
    ?airport2 <airport:status> <status:Active>.
    ?airport3 <airport:status> <status:Active>.
    ?airportDest <airport:status> <status:Active>.
    ?route1 <route:fromAirport> ?airportSrc.
    ?route1 <route:toAirport> ?airport2.
    ?route1 <route:flight> ?flight1.
    ?flight1 <flight:flightTime> ?flightTime1.
    ?route2 <route:fromAirport> ?airport2.
    ?route2 <route:toAirport> ?airport3.
    ?route2 <route:flight> ?flight2.
    ?flight2 <flight:flightTime> ?flightTime2.
    ?route3 <route:fromAirport> ?airport3.
    ?route3 <route:toAirport> ?airportDest.
    ?route3 <route:flight> ?flight3.
    ?flight3 <flight:flightTime> ?flightTime3.
    ?flight1 <flight:status> <status:Active>.
    ?flight2 <flight:status> <status:Active>.
    ?flight3 <flight:status> <status:Active>.
    BIND((?flightTime1 + ?flightTime2 + ?flightTime3) AS ?flightTime)
    BIND ((3) as ?numFlights)
    # Drop paths that revisit an airport
    FILTER (?airportSrc != ?airport3)
    FILTER (?airportDest != ?airport2)
  }
  UNION
  # Four flights: Src -> airport2 -> airport3 -> airport4 -> Dest
  {
    ?airportSrc <airport:routesTo> ?airport2.
    ?airport2 <airport:routesTo> ?airport3.
    ?airport3 <airport:routesTo> ?airport4.
    ?airport4 <airport:routesTo> ?airportDest.
    ?airportSrc <airport:status> <status:Active>.
    ?airport2 <airport:status> <status:Active>.
    ?airport3 <airport:status> <status:Active>.
    ?airport4 <airport:status> <status:Active>.
    ?airportDest <airport:status> <status:Active>.
    ?route1 <route:fromAirport> ?airportSrc.
    ?route1 <route:toAirport> ?airport2.
    ?route1 <route:flight> ?flight1.
    ?flight1 <flight:flightTime> ?flightTime1.
    ?route2 <route:fromAirport> ?airport2.
    ?route2 <route:toAirport> ?airport3.
    ?route2 <route:flight> ?flight2.
    ?flight2 <flight:flightTime> ?flightTime2.
    ?route3 <route:fromAirport> ?airport3.
    ?route3 <route:toAirport> ?airport4.
    ?route3 <route:flight> ?flight3.
    ?flight3 <flight:flightTime> ?flightTime3.
    ?route4 <route:fromAirport> ?airport4.
    ?route4 <route:toAirport> ?airportDest.
    ?route4 <route:flight> ?flight4.
    ?flight4 <flight:flightTime> ?flightTime4.
    ?flight1 <flight:status> <status:Active>.
    ?flight2 <flight:status> <status:Active>.
    ?flight3 <flight:status> <status:Active>.
    ?flight4 <flight:status> <status:Active>.
    BIND((?flightTime1 + ?flightTime2 + ?flightTime3 + ?flightTime4) AS ?flightTime)
    BIND ((4) as ?numFlights)
    # Drop paths that revisit an airport
    FILTER (?airportSrc != ?airport3)
    FILTER (?airportSrc != ?airport4)
    FILTER (?airportDest != ?airport2)
    FILTER (?airportDest != ?airport3)
    FILTER (?airport2 != ?airport4)
  }
} ORDER BY ?numFlights ?flightTime
```

The SELECT DISTINCT statement instructs the processor to ignore duplicate rows, then identifies which SPARQL variables should be displayed or converted to external output. ?airportSrc and ?airportDest are the source and destination airports, with ?airportN indicating the Nth airport in the trip when it's not one of these two. The next statements

```sparql
?airportSrc <owl:sameAs> <airport:SEA>.
?airportDest <owl:sameAs> <airport:ORD>.
```

identify the source and destination airports for the query, and in most cases would probably be passed in as parameters. A condition is then placed to ensure that both the source and destination airports are active (the query will return no paths if either airport is closed, as would be expected).

After this come four alternative patterns, joined by UNION, corresponding to trips of one through four flights. The second (two flights) is representative of the assertions involved:

```sparql
UNION
{
  ?airportSrc <airport:routesTo> ?airport2.
  ?airport2 <airport:routesTo> ?airportDest.
  ?airportSrc <airport:status> <status:Active>.
  ?airport2 <airport:status> <status:Active>.
  ?airportDest <airport:status> <status:Active>.
  ?route1 <route:fromAirport> ?airportSrc.
  ?route1 <route:toAirport> ?airport2.
  ?route1 <route:flight> ?flight1.
  ?flight1 <flight:flightTime> ?flightTime1.
  ?route2 <route:fromAirport> ?airport2.
  ?route2 <route:toAirport> ?airportDest.
  ?route2 <route:flight> ?flight2.
  ?flight2 <flight:flightTime> ?flightTime2.
  ?flight1 <flight:status> <status:Active>.
  ?flight2 <flight:status> <status:Active>.
  BIND((?flightTime1 + ?flightTime2) AS ?flightTime)
  BIND ((2) as ?numFlights)
}
```

This assumes that three airports are involved: ?airportSrc, ?airport2 and ?airportDest. The <airport:routesTo> statements retrieve the set of all airports (?airport2) that can be reached from ?airportSrc and that in turn route to ?airportDest. These determine the two routes used, and once you know a route, you can retrieve the flight(s) associated with it. Once you have a flight, you can determine its flight time. The first BIND statement adds the two flight times together and places the result in the ?flightTime variable. The second BIND sets the ?numFlights variable to 2, since there are two flights.

Things get a bit more complicated once you move beyond two flights. With three or more flights there is the possibility of circular paths: the trip may circle back to a previous airport before making the jump to the final airport. It is possible (albeit fairly complicated) to short-circuit such paths, but for the purposes of this demo I simply checked all potential match configurations and used FILTERs to remove the paths where this occurred. For example, with four flights you need the following filters, assuming that Src and Dest are not the same:

```sparql
FILTER (?airportSrc != ?airport3)
FILTER (?airportSrc != ?airport4)
FILTER (?airportDest != ?airport2)
FILTER (?airportDest != ?airport3)
FILTER (?airport2 != ?airport4)
```
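The filter list follows a simple rule: every pair of positions in the path needs a FILTER unless the two are adjacent (consecutive airports already differ, since an airport does not route to itself) or they are the Src/Dest pair (assumed to be different). A small sketch that generates the clauses for a given number of flights, using the query's variable names:

```python
from itertools import combinations
from typing import List


def loop_filters(n_flights: int) -> List[str]:
    """FILTER clauses that stop an n-flight path from revisiting an airport.

    Adjacent airports already differ (an airport does not route to itself) and
    Src != Dest is assumed, so only the remaining non-adjacent pairs are checked.
    """
    names = (["?airportSrc"]
             + [f"?airport{i}" for i in range(2, n_flights + 1)]
             + ["?airportDest"])
    last = len(names) - 1
    return [
        f"FILTER ({names[i]} != {names[j]})"
        for i, j in combinations(range(len(names)), 2)
        if j - i >= 2 and (i, j) != (0, last)
    ]


for clause in loop_filters(4):
    print(clause)
```

For four flights this yields the same five comparisons as the query (some with the operands swapped), for three flights the two used there, and for five flights it would produce nine, which is one way to generalize the query beyond four legs.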

Once these are applied, the resulting rows are ordered first by the number of flights, then by the flight time:

```sparql
} ORDER BY ?numFlights ?flightTime
```

In Jena or a similar triple store, this produces a table that looks something like this:

| airportSrc | airport2 | airport3 | airport4 | airportDest | flight1 | flight2 | flight3 | flight4 | numFlights | flightTime |
|---|---|---|---|---|---|---|---|---|---|---|
| <airport:SEA> | | | | <airport:ORD> | <flight:FL17> | | | | "1" | "7.1" |
| <airport:SEA> | <airport:DEN> | | | <airport:ORD> | <flight:FL7> | <flight:FL8> | | | "2" | "8.0" |
| <airport:SEA> | <airport:LAX> | | | <airport:ORD> | <flight:FL25> | <flight:FL12> | | | "2" | "10.5" |
| <airport:SEA> | <airport:DEN> | <airport:STL> | | <airport:ORD> | <flight:FL7> | <flight:FL21> | <flight:FL19> | | "3" | "8.4" |
| <airport:SEA> | <airport:SFO> | <airport:LAS> | | <airport:ORD> | <flight:FL1> | <flight:FL4> | <flight:FL6> | | "3" | "8.8" |
| <airport:SEA> | <airport:SFO> | <airport:LAX> | | <airport:ORD> | <flight:FL1> | <flight:FL2> | <flight:FL12> | | "3" | "10.2" |
| <airport:SEA> | <airport:LAX> | <airport:LAS> | | <airport:ORD> | <flight:FL25> | <flight:FL5> | <flight:FL6> | | "3" | "10.9" |
| <airport:SEA> | <airport:LAX> | <airport:STL> | | <airport:ORD> | <flight:FL25> | <flight:FL23> | <flight:FL19> | | "3" | "11.2" |
| <airport:SEA> | <airport:LAX> | <airport:STL> | | <airport:ORD> | <flight:FL25> | <flight:FL24> | <flight:FL19> | | "3" | "11.2" |
| <airport:SEA> | <airport:LAX> | <airport:DEN> | | <airport:ORD> | <flight:FL25> | <flight:FL3> | <flight:FL8> | | "3" | "11.8" |
| <airport:SEA> | <airport:SFO> | <airport:LAX> | <airport:LAS> | <airport:ORD> | <flight:FL1> | <flight:FL2> | <flight:FL5> | <flight:FL6> | "4" | "10.6" |
| <airport:SEA> | <airport:SFO> | <airport:LAS> | <airport:LAX> | <airport:ORD> | <flight:FL1> | <flight:FL4> | <flight:FL11> | <flight:FL12> | "4" | "10.8" |
| <airport:SEA> | <airport:SFO> | <airport:LAX> | <airport:STL> | <airport:ORD> | <flight:FL1> | <flight:FL2> | <flight:FL23> | <flight:FL19> | "4" | "10.9" |
| <airport:SEA> | <airport:SFO> | <airport:LAX> | <airport:STL> | <airport:ORD> | <flight:FL1> | <flight:FL2> | <flight:FL24> | <flight:FL19> | "4" | "10.9" |
| <airport:SEA> | <airport:SFO> | <airport:LAX> | <airport:DEN> | <airport:ORD> | <flight:FL1> | <flight:FL2> | <flight:FL3> | <flight:FL8> | "4" | "11.5" |
| <airport:SEA> | <airport:LAX> | <airport:DEN> | <airport:STL> | <airport:ORD> | <flight:FL25> | <flight:FL3> | <flight:FL21> | <flight:FL19> | "4" | "12.2" |
| <airport:SEA> | <airport:LAX> | <airport:SFO> | <airport:LAS> | <airport:ORD> | <flight:FL25> | <flight:FL10> | <flight:FL4> | <flight:FL6> | "4" | "13.1" |
| <airport:SEA> | <airport:LAX> | <airport:STL> | <airport:DEN> | <airport:ORD> | <flight:FL25> | <flight:FL23> | <flight:FL20> | <flight:FL8> | "4" | "15.7" |
| <airport:SEA> | <airport:LAX> | <airport:STL> | <airport:DEN> | <airport:ORD> | <flight:FL25> | <flight:FL24> | <flight:FL20> | <flight:FL8> | "4" | "15.7" |
| <airport:SEA> | <airport:DEN> | <airport:STL> | <airport:LAX> | <airport:ORD> | <flight:FL7> | <flight:FL21> | <flight:FL22> | <flight:FL12> | "4" | "16.1" |

Note that this app doesn't concern itself with whether a route is practical (going from Seattle to Denver to Saint Louis to Los Angeles before going to O'Hare in Chicago doesn't really make a lot of sense, for instance), but the sorting places that path last, indicating that it is probably the least optimal one to take.
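For comparison, the whole search (one to four flights, no revisited airports, sorted like `ORDER BY ?numFlights ?flightTime`) can be sketched outside SPARQL as a depth-first search. This is a swapped-in technique, not what the query above does: a visited set short-circuits circular paths as they are built, instead of enumerating every configuration and filtering afterward. The `Flight` record, the `all_trips` function and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flight:
    # Hypothetical record standing in for the route/flight triples.
    id: str
    origin: str
    dest: str
    hours: float
    active: bool = True


def all_trips(flights, src, dest, max_flights=4):
    """Enumerate cycle-free itineraries of up to max_flights legs from src to dest."""
    results = []

    def extend(airport, visited, legs, hours):
        if airport == dest:
            results.append((len(legs), hours, tuple(legs)))
            return
        if len(legs) == max_flights:
            return
        for f in flights:
            # Skip inactive flights and any leg that would revisit an airport.
            if f.active and f.origin == airport and f.dest not in visited:
                extend(f.dest, visited | {f.dest}, legs + [f.id], hours + f.hours)

    extend(src, {src}, [], 0.0)
    # Same ordering as ORDER BY ?numFlights ?flightTime.
    results.sort(key=lambda r: (r[0], r[1]))
    return results


flights = [
    Flight("F1", "A", "B", 3.0),
    Flight("F2", "B", "C", 2.5),
    Flight("F3", "A", "C", 6.0),
    Flight("F4", "A", "D", 1.0),
    Flight("F5", "D", "B", 1.0),
    Flight("F6", "B", "A", 1.0),  # would loop back to A, so it is never used
]
for trip in all_trips(flights, "A", "C"):
    print(trip)
```

With this sample data the three-flight itinerary has the shortest total time yet still sorts last, because the number of flights is the primary sort key, just as in the table above.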

A full-scale logistical application would certainly take into account several other factors - scheduling, seat availability, specific aircraft bindings, wind speed, ticket costs and the dozens of other variables involved in any type of logistics problem - but the point here is not to build such an application ... it is only to show that such an application is possible through the use of semantic databases.

Next week, I hope to take this SPARQL application and show how it can then be bound to both a MarkLogic and node.js web application.

Kurt Cagle is an author and information architect for Avalon Consulting, LLC. His latest book, HTML5 Graphics with SVG and CSS3, will be available from O'Reilly Media soon.

Tuesday, February 12, 2013

Why Your Next Programming Language Will Be JavaScript

Once upon a time - okay, about 1995 or so - there was a race for dominance of this new thing called the World Wide Web. On one side was Microsoft, which had firmly staked its ground a few years before with the introduction of Windows 3.0. On the other side was a little startup called Netscape that had only one product - a web browser - which it had been beta testing for free but was now faced with trying to get people to actually pay for. So one of the programmers there, a bright guy by the name of Brendan Eich, came up with a novel concept - a simplified scripting language that borrowed some of the syntax from the new Java language that Sun, just down the road in the Bay Area, had debuted a few months earlier, added in a few constructs that were a bit groundbreaking, and made it possible to alter the fields of the new HTML form controls that had just come out.

The first versions were, like much of the web at that time, laughably crude. However, they paved the way for a fairly radical notion - that the document object model of a page could be manipulated. That manipulation was initially very limited, partially because there were few things that JavaScript could act on, and partially because the language itself was rather painfully slow. Saying you were an expert in JavaScript was a good way to not get a job, when the big money was going to C++ and Java developers that were writing real code.

Eighteen years later, things have changed rather radically. Several major innovations have occurred in the JavaScript world, and these have significantly shaken things up.

AJAX and JSON. The ability to communicate between the client and server out of band (outside the initial page load from the server) made it possible to turn the web browser into a direct communication portal with a potentially large data store, in effect establishing the notion that the client need not be restricted to a read-only view of the universe. AJAX also made it feasible to use serialized JavaScript objects - combinations of atomic primitives, hash maps and arrays - as a data transport format. In this regard, it may very well have short-circuited the SOAP-based service-oriented architecture model and usurped XML's role as a data transport language.
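A minimal illustration, in Python rather than JavaScript, of why this worked so well: a record built only from primitives, hash maps and arrays survives a round trip through JSON intact. Here Python's standard `json` module plays the role that `JSON.stringify` and `JSON.parse` play in the browser, and the field names are made up.

```python
import json

# A record made only of primitives, hash maps (dicts) and arrays (lists),
# the same ingredients a serialized JavaScript object is built from.
order = {
    "id": 1017,
    "customer": {"name": "Ada", "vip": True},
    "items": [{"sku": "A-1", "qty": 2}, {"sku": "B-7", "qty": 1}],
    "note": None,
}

payload = json.dumps(order)     # the text that would travel in an AJAX request
restored = json.loads(payload)  # the structure the receiving side rebuilds

print(payload)
print(restored == order)
```

No schema or envelope is needed on either side; the nesting itself carries the structure, which is much of what made JSON a lighter alternative to SOAP payloads.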

jQuery. One of the biggest challenges web developers have faced since the introduction of JavaScript is that there have been, at any given time, at least two and in some cases four or five different but similar APIs for working with web browsers, depending upon the vendor of the browser. This made developing applications painful, and kept cross-platform applications to a minimum. After AJAX sparked renewed interest in the language, however, one side effect was the emergence of JavaScript libraries that provided an abstraction layer over these interfaces, so that you could write to the library and not the browser. A number of these floated around for a while, but by 2011 or so jQuery had become the evident leader, and the concept of JavaScript as a web "virtual machine" began to inch closer to reality.
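The abstraction-layer idea boils down to feature detection hidden behind a single function. In the sketch below the two element classes are hypothetical Python stand-ins for browser objects: their method names mirror the real DOM `addEventListener` and old Internet Explorer's `attachEvent` (which expected names such as "onclick"), while the `fire` method and the `on` wrapper are illustrative only and are not jQuery's actual code.

```python
class StandardElement:
    """Hypothetical stand-in for an element in a standards-based browser."""

    def __init__(self):
        self.handlers = {}

    def addEventListener(self, event, handler):
        self.handlers.setdefault(event, []).append(handler)

    def fire(self, event):
        for handler in self.handlers.get(event, []):
            handler(event)


class LegacyIEElement:
    """Hypothetical stand-in for an old-IE element, keyed by "onclick"-style names."""

    def __init__(self):
        self.handlers = {}

    def attachEvent(self, event_name, handler):
        self.handlers.setdefault(event_name, []).append(handler)

    def fire(self, event):
        for handler in self.handlers.get("on" + event, []):
            handler(event)


def on(element, event, handler):
    """Library-level wrapper: callers write to this, not to either vendor API."""
    if hasattr(element, "addEventListener"):
        element.addEventListener(event, handler)
    elif hasattr(element, "attachEvent"):
        element.attachEvent("on" + event, handler)
    else:
        raise TypeError("unsupported element")


clicks = []
for element in (StandardElement(), LegacyIEElement()):
    on(element, "click", clicks.append)  # identical call for both "browsers"
    element.fire("click")
print(clicks)
```

Application code only ever calls `on`; when a new vendor API appears, only the wrapper has to change.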

Functional JavaScript. The jQuery libraries in turn forced a rapid evolution in both the capabilities of JavaScript and the way that JavaScript itself was written. Gone were the days when JavaScript applications looked like Java applications; the language was now far more functionally oriented, asynchronous and built around callbacks. It truly was an event-driven language, and several declarative languages that until comparatively recently had found more success in academia than in the commercial world - Haskell, OCaml, Scheme, Miranda - ended up getting pillaged for idioms in taking JavaScript to the next level: monads, higher-order functions, heavy use of closures and so forth. As a consequence, the language itself took on a flavor that, while outwardly mirroring the syntax of Java, C and other Algol-based languages, was actually moving towards a declarative model that made for some incredibly powerful programming ... and not coincidentally towards a model that was far more efficiently implementable.
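The functional idioms mentioned here, closures and functions as first-class values, look much the same in any language that supports them. A small Python sketch follows; the helper names `make_accumulator` and `compose` are hypothetical.

```python
from functools import reduce


def make_accumulator():
    """Return a function that closes over its own private running total."""
    total = 0

    def add(amount):
        nonlocal total
        total += amount
        return total

    return add


def compose(*fns):
    """Build a new function that applies fns left to right."""
    return lambda x: reduce(lambda acc, f: f(acc), fns, x)


acc = make_accumulator()
acc(2)
print(acc(3))  # 5: the closure remembers the earlier call

increment_then_double = compose(lambda x: x + 1, lambda x: x * 2)
# Functions passed as arguments, the same shape as callback-driven code.
print(list(map(increment_then_double, [1, 2, 3])))
```

The accumulator keeps its state without any class or global variable, which is the pattern much callback-heavy JavaScript relies on.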

The Power of V8. Google released the first Chrome browser in 2008, and in the space of a few years managed to do what Firefox, the reincarnated version of Netscape Mozilla, never quite managed to accomplish - it shoved Microsoft out of browser dominance, and in the process laid the groundwork for a full frontal assault on the software giant. One of the key pieces of that strategy was the re-engineering of the JavaScript engine, using just-in-time compilation to native machine code and some incredible design efficiency to make JavaScript not just a bit faster, but close to the speed of compiled languages such as Java or C++ for many workloads. This, coupled with similar changes in the browser DOM, set off a mad rush by the other vendors to overhaul their JavaScript engines, and all of a sudden, JavaScript-based web applications were becoming sophisticated enough to make stand-alone applications look positively dowdy in comparison.

Node.js. This in turn made possible something that had been talked about for years but at best poorly realized - a JavaScript-based server platform. While JavaScript had been quietly taking over the browser client space during the 90s and 00s, the server side had seen a plethora of languages come and go - Perl, ASP, PHP, Python, Ruby on Rails, ASP.NET - and the list was long enough to make for fiercely partisan camps in that space. After thinking through a lot of the philosophy on threading, closures, and asynchronous development, Ryan Dahl took Chrome's V8 engine and used it as the core of a general-purpose server platform in 2009. Node.js caught on rapidly, partially because a lot of developers were already used to working with JavaScript on the client and found that the same toolsets and skills that worked there could be applied to the server side. As a side effect, the number of JavaScript libraries exploded, taking advantage of an installation mechanism (npm) that mirrored Ruby gems. Node.js was also more than just a web scripting language - it could be used to run scripts that worked on internal system objects and communicated with the next major shift in development, the rise of NoSQL databases.
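The asynchronous, single-threaded model that Node.js popularized can be sketched with Python's `asyncio`. It is a different runtime but the same idea: while one request waits on I/O, the event loop runs the others. The request names and delays are made up, and `asyncio.sleep` stands in for real I/O such as a database query.

```python
import asyncio


async def handle_request(name, io_seconds, log):
    # Awaiting simulated I/O hands control back to the event loop,
    # so other requests make progress on the same thread meanwhile.
    await asyncio.sleep(io_seconds)
    log.append(name)


async def serve(log):
    # Start both "requests" concurrently; neither blocks the other.
    await asyncio.gather(
        handle_request("slow-db-query", 0.05, log),
        handle_request("fast-cache-hit", 0.01, log),
    )


completed = []
asyncio.run(serve(completed))
print(completed)
```

Although the slow request starts first, the fast one finishes first, and no extra threads were created to make that happen.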

NoSQL. The 1990s saw the rise of the relational database, and traditional SQL databases played a big part in the way data was stored through the late 00s. However, one of the major side effects of AJAX was a fairly fundamental shift in the way that data was treated. Before then, most applications communicated with a SQL database through some kind of recordset abstraction layer, and the 90s especially were littered with three-letter data access layer acronyms. Once AJAX came into play, though, it became clear that data was seldom flat - it had structure, with both clearly defined and less clearly defined joins of various kinds, and more often than not it resembled a document rather than simply a bag of property values. About this same time, data in motion began to become more broadly important than data at rest, and for that reason the normalization typically required to fit such structures into flat tables began to seem archaic when the structures themselves already had clearly defined, multi-tiered layers.

NoSQL databases - a wide gamut of non-traditional databases including XML, JSON, name/value, columnar, graph and RDF stores - had been percolating in the background during much of the 00s. As system performance improved, these stores (which usually embedded not just single tables but whole DOMs) began to be relied upon, first for document-centric information, then later for data-centric information. AJAX gave them a much-needed push, as being able to store complex data structures directly without having to reconstruct implicit joins reduced the overall data access burden considerably, and also moved much of the computing world to a more resource-oriented (or RESTful) mode of operation.
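The "implicit joins" point can be made concrete with a small Python sketch that stores one order both ways. The data is illustrative, the relational side is just lists of flat rows, and a dict keyed by id stands in for a document store; no particular database product's API is implied.

```python
# Relational style: one order split across two flat tables (illustrative data).
customers = [{"customer_id": 7, "name": "Ada"}]
order_lines = [
    {"order_id": 1017, "customer_id": 7, "sku": "A-1", "qty": 2},
    {"order_id": 1017, "customer_id": 7, "sku": "B-7", "qty": 1},
]


def rebuild_order(order_id):
    """Reassemble the nested order by joining the flat rows back together."""
    lines = [row for row in order_lines if row["order_id"] == order_id]
    customer = next(c for c in customers if c["customer_id"] == lines[0]["customer_id"])
    return {
        "order_id": order_id,
        "customer": {"name": customer["name"]},
        "items": [{"sku": row["sku"], "qty": row["qty"]} for row in lines],
    }


# Document style: the same structure stored whole and read back with one lookup.
document_store = {
    1017: {
        "order_id": 1017,
        "customer": {"name": "Ada"},
        "items": [{"sku": "A-1", "qty": 2}, {"sku": "B-7", "qty": 1}],
    }
}

print(rebuild_order(1017) == document_store[1017])
```

Both paths yield the same structure, but only the relational one has to rebuild it from rows on every read.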

Many of these systems are designed to work with JSON in one form or another, and increasingly JavaScript forms at least part of the query and update chain. Additionally, even in XML data stores, the endpoints are becoming JSONified. Efforts are underway to take the powerful but underutilized XQuery language and make a JavaScript binding layer to it as well.

Map/Reduce. Google's search capabilities are made possible primarily by creating massive indexes of the web. The technique for building these indexes in turn comes from a powerful application of a concept called map/reduce, in which incoming data (such as web pages) is split into large blocks, a map step processes each block independently to emit intermediate key/value pairs (for an index, a word and the page it appears on), and a reduce step then combines all of the values for each key into a single indexed data set. Because the content is split apart and then reintegrated, such a process can be spread across multiple parallel processes, each running on its own real or virtual server with dedicated processors.
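A toy version of the map, group and reduce steps, building a tiny inverted index, can be sketched in Python. The function names and pages are hypothetical, and a thread pool stands in for the separate servers of a real cluster. Because of Python's global interpreter lock this shows the structure of the computation rather than a real speedup, and it is not how Google's or Hadoop's systems are actually implemented.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_page(page):
    """Map step: emit a (word, url) pair for each distinct word on one page."""
    url, text = page
    return [(word, url) for word in set(text.lower().split())]


def shuffle(mapped_chunks):
    """Group every intermediate value under its key."""
    groups = defaultdict(list)
    for pairs in mapped_chunks:
        for word, url in pairs:
            groups[word].append(url)
    return groups


def reduce_word(urls):
    """Reduce step: collapse one key's values into a single sorted posting list."""
    return sorted(set(urls))


def build_index(pages, workers=4):
    # Pages are mapped independently, so the map calls can run in parallel.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_page, pages))
    return {word: reduce_word(urls) for word, urls in shuffle(mapped).items()}


pages = [
    ("a.html", "Red fish blue fish"),
    ("b.html", "Blue sky"),
]
index = build_index(pages)
print(index["blue"])
```

The map and reduce functions never see more than one page or one word at a time, which is what lets the real systems distribute them across many machines.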

Hadoop, perhaps the best known of the map/reduce applications, is written in Java. However, other platforms, most notably node.js, increasingly have their own Hadoop-like map/reduce systems, and JavaScript, which has a remarkably fluid concept of arrays, objects and functions, is in many respects better suited to M/R-type operations - it is a language built upon asynchronous callbacks, dynamically generated objects, functions as arguments and distributed data. Given its ease of use in such environments compared to Java, which still retains many artifacts of a strongly typed language, JavaScript in node.js has a very good chance of dethroning the Hadoop/Java stack as the preferred mechanism for doing rich M/R.

HTML5, Android and Mobile. The HTML specification was, for all intents and purposes, frozen at version 4.0 in 1997. Several proposals for extending the language - a graphical language (or two), CSS for layout, video and audio, new controls and so forth - had been building up in the background, but it was ultimately a decision by outside groups to create a new version of the specification that provided the impetus to tie all of these pieces together. The resulting set of technologies, including capabilities for managing both 2D and 3D graphics, was sophisticated enough to satisfy several key requirements for a graphical user interface ... and put the final nail in the coffin of third-party binary plugins for the browser.

Google purchased the Android core set in 2005 and built on top of it for several years, until the nascent smartphone market exploded with Apple's introduction of the iPhone in 2007. By the time the first Android phone was released in 2008, its Java-based operating system was firmly set. However, Java is somewhat problematic for Google - it does not control the technology, and the Android OS has a number of vulnerabilities that arise precisely because of the use of Java.

A number of attempts have been made by third-party developers to create a JavaScript layer for the Android OS, and had the V8 engine been available when Android was being designed, Google would almost certainly have chosen it rather than Java as its build language. A great deal of app development comes down to binding together existing component functionality (which is usually hardware based) rather than creating that functionality from scratch, and binding is a task at which JavaScript excels. While Google tends to be tight-lipped about its current development plans, it's not inconceivable that Android 5.0 will end up being written around the V8 core and use JavaScript, rather than Java, as its primary language.

It should be noted that, in this context, two of the hottest development platforms today are Appcelerator Titanium and PhoneGap, both of which provide a JavaScript environment for binding applications together. These platforms are popular primarily because they are remarkably cross-platform - you can build the same application for iOS as for Android, while Objective-C and Java by their very nature limit you to one or the other.

There are several good reasons to suspect that Google will move in this direction. One of them is that HTML5 is now capable of producing more sophisticated user interfaces than many app developers have available to them natively. Such apps could be deployed as a mix of local framework shell and just-in-time application code (which is essentially what a web application is), and they could also take advantage of robust third-party libraries usable within web clients and JavaScript servers alike, which in turn lowers the overall threshold for the skills an application developer needs to maintain.

Additionally, the same things that make JavaScript advantageous in web and server development - closures, functions as objects, weak typing, dynamic declarative programming, event driven design - are perhaps even more appropriate for mobile devices and sensors than they are for the relatively robust world of web browsers.

Finally, an HTML5/SVG/CSS/JavaScript stack makes for a powerful virtual machine that can run on phones, tablets, traditional computers and nearly any other device without significant re-engineering from one device to the next. The current architecture for Android gets close, but applications still work inconsistently across devices due to low-level considerations.

JavaScript as Services Language. Outside mobile, the next major area where JavaScript is set to explode is the services arena. JSON has been steadily wearing away at the SOAP-based SOA stack for several years now, as well as at the publish/subscribe world of RSS and Atom (both of which now have JSON profiles). Web workers and socket.js are reshaping point-to-point protocols. With JavaScript's increasing dominance in the NoSQL sphere, it's a natural language for handling the moderate-bandwidth interchange that increasingly drives interactive applications, as opposed to high-speed communication protocols.

These are all reasons why I suspect that JavaScript is likely to make its way into the enterprise as perhaps the primary language by decade's end, if not sooner. Most current metrics give JavaScript a fairly low overall position compared to others (Tiobe.com places it at #11), but this is primarily a reflection of server-side usage in the enterprise, where its use today is still fairly limited, and of the fact that two of the most popular languages right now, Objective-C and Java, are there because of the explosion of mobile app development. A V8 engine built into a future version of Android would rocket JavaScript into the top tier fairly quickly. The growing trend towards "big data" solutions currently favors Java as well, but as JavaScript (and node.js in particular) develops its own stack, I think that this language, rather than Java, will become as pervasive on the server as it is on the web client.

It's also becoming the common tongue that most developers have in their back pocket, because so much of application development requires knowing at least some web development skills. It may not necessarily be a favorite language, but common languages have a tendency to become dominant over time, and as JavaScript increasingly becomes a fixture on the server, it's very likely that it will eventually (and quickly) own the server.

Thus, when I talk to people entering the information technology field today about what languages they should be learning, I put JavaScript at the top of that list. Learn JavaScript and node.js, HTML5 and SVG and Canvas, and CoffeeScript (a very cool language that uses JavaScript as its VM). Learn JavaScript the way it's used today, as a language that's more evocative of Lisp and Haskell than of Java and C++. It's pervasive, and will only become more so.

The first versions were, like much of the web at that time, laughably crude. However, they paved the way for a fairly radical notion - that the document object model of a page could be manipulated. That manipulation was initially very limited, partially because there were few things that JavaScript could act on, and partially because the language itself was rather painfully slow. Saying you were an expert in JavaScript was a good way to not get a job, when the big money was going to C++ and Java developers that were writing real code.

Eighteen years later, things have changed rather radically. Several major innovations have occurred in the JavaScript world, and these have significantly shaken things up.

AJAX and JSON. The ability to communicate between the client and server out of band (out of the initial load from the server) made it possible to turn the web browser into a direct communication portal with a potentially large data store, in effect establishing the notion that the client need not be restricted to a read-only view of the universe. AJAX also made the idea of using serialized JavaScript objects - combinations of atomic primitives, hash maps and arrays - as data transport protocols feasible. In this regard, it may very well have short circuited the SOAP-based services oriented architecture model and usurped XML's role as a data transport language.

jQuery. One of the biggest challenges that any web developer faced since the introduction of JavaScript has been the fact that there have been, at any given time, at least two and in some cases four or five different but similar APIs for working with web browsers, depending upon the vendor of that browser. This made developing applications painful, and kept cross platform applications to a minimum. After AJAX sparked renewed interest in the language, however, one side effects was the emergence of different JavaScript libraries that provided an abstraction layer to the interfaces, so that you could write to the library and not the browser. A number of these floated around for a while, but by 2011 or so jQuery had become the evident leader, and the concept of JavaScript as web "virtual machine" began to inch closer to reality.

Functional JavaScript. The jQuery libraries in turn forced a rapid evolution in both the capabilities of JavaScript, and in the way that JavaScript itself was written. Gone were the days when JavaScript applications looked like Java applications; the language was now far more functionally oriented, asynchronous and built around callbacks. It truly was an event-driven language, and several other declarative languages that had until comparatively recently found more success in academia than in the commercial world - Haskell, Ocaml, Scheme, Miranda - ended up getting pillaged for ideas in taking JavaScript to the next level - with the use of monads, functions as first class objects, closures and so forth. As a consequence, the language itself took on a flavor that, while outwardly mirroring the syntax of Java, C and other Algol based languages, actually was moving towards a declarative model that made for some incredibly powerful programming ... and not coincidentally towards a model that was far more efficiently implementable.

The Power of V8. Google released the first Chrome browser in 2010, and in the space of three years managed to do what Firefox, the reincarnated version of Netscape Mozilla, never quite managed to accomplish - it shoved Microsoft out of browser dominance, and in the process laid the groundwork for a full frontal assault on the software giant. One of the key pieces of that strategy was the re-engineering of the JavaScript engine, using virtualization techniques and some incredible design efficiency to make JavaScript not just a bit faster, but comparable in speed to compiled languages such as Java or C++. This, coupled with similar changes in the browser DOM, set off a mad rush by the other vendors to overhaul their JavaScript engines, and all of a sudden, JavaScript-based web applications were becoming sufficiently sophisticated that they made stand-alone applications begin to look positively dowdy in comparison.

Node.js. This in turn made possible something that had been talked about for years but at best poorly realized - a JavaScript based server platform. While JavaScript had been quietly taking over the browser client space during the 90s and 00s, the server side has seen a plethora of languages come and go - Perl, ASP, PHP, Python, Ruby on Rails, ASP.NET, the list was fairly long and made for fiercely partisan camps in that space. However, after thinking through a lot of the philosophy on threading, closures, and asynchronous development, Ryan Dahl took the V8 chrome engine and used it as the core for a generalized server language in 2009. This engine caught on rapidly - partially because there were a lot of developers who were used to working with node.js on the client, and found that the same toolsets and skills that worked there could then be applied to the server side. As a side effect, the number of JavaScript libraries exploded, taking advantage of an installation mechanism (npm) that mirrored Ruby gems. Node.js was also more than just a web scripting language - it could be used for running scripts that could work on internal system objects and communicated with the next major shift in development, the rise of NoSQL databases.

NoSQL. The 1990s saw the rise of the relational database, and traditional SQL databases played a big part in the way data was stored through the late 00s. However, one of the major side effects of AJAX was a fairly fundamental shift in the way that data was treated. Prior to then, most applications communicated with a SQL database through some kind of recordset abstraction layer, and the 90s especially were littered with three letter data access layer acronyms. However, one of the things that began to emerge once AJAX came into play was that data was seldom flat - it had structure and both clearly defined and not-so definitive joins of various kinds, and more often than not more closely resembled a document rather than simply a bag of property values. About this same time, data in motion really began to become more broadly important than data at rest, and for that reason the denormalization of data that was typically involved with such structures began to seem archaic when those structures already had clearly defined, and multi-tiered, layers.

NoSQL databases - a wide gamut of non-traditional databases including XML, JSON, name/value, columnar, graph and RDF stores - had been percolating in the background during much of the 00s. As system performance improved, these stores (which usually embedded not just single tables but whole DOMs) began to relied upon, first for document-centric information, then later for data-centric. AJAX gave them a much needed push, as being able to store complex data structures directly without having to reconstruct implicit joins reduced the overall data access burden considerably, and also moved much of the computing world to a more resource oriented (or RESTful) mode of operation.

Many of these systems are designed to work with JSON in one form or another, and increasingly JavaScript forms at least part of the query and update chain. Additionally, even in XML data stores, the endpoints are becoming JSONified. Efforts are underway to take the powerful but underutilized XQuery language and make a JavaScript binding layer to it as well.

Map/Reduce. Google's search capabilities are made possible primarily by creating massive indexes of the web. The technique for building these indexes in turn comes from a powerful application of a concept called map/reduce, in which incoming data (such as web pages) is mapped into large blocks, each block is processed for indexing, then the subsequent indexed content is reduced into a single indexed data set. By splitting the content apart then reintegrating, such a process can be transformed into multiple parallel processes, each running on its own real or virtual server space with dedicated processors.

Hadoop, perhaps the most well known of the map/reduce applications, is written in Java. However, increasingly other languages, including most notably node.js have built their own Hadoop-like map/reduce system, and JavaScript, which has a remarkably fluid concept of arrays, objects and functions, is in many respects more ideally suited for M/R type operations - it is a language built upon asynchronous callbacks, dynamically generated objects, functions as arguments and distributed data. Given its ease of use compared to Java, which still retains many artifacts of a strongly typed language, in such environments, JavaScript in node.js has a very good chance of dethroning the Hadoop/Java stack as the preferred mechanism for doing rich M/R.

HTML5, Android and Mobile. The HTML specification was, for all intents and purposes, frozen at version 4.0 in 1997. Several proposals for expanding outliers to the language - a graphical language (or two), CSS for layout, video and audio, new controls and so forth - had been building up in the background, but it was ultimately a decision to create a new version of the specification by outside groups that ended up providing the impetus to try to tie all of these pieces together. The resulting set of technologies was very sophisticated, including capabilities for managing both 2D and 3D graphics, sophisticated enough that it satisfied several key requirements for a graphical user interface ... and put the final nail in the coffin of third party binary plugins for the browser.

Google purchased the Android core set in 2005, and built on top of that for several years until the nascent smart phone market exploded with Apple's introduction of the iPhone in 2007. By the time the first Android phone was released in 2008, it's Java based operating system was firmly set. However, Java for Google is somewhat problematic - it does not control the technology, and the Android OS has a number of vulnerabilities that arise precisely because of the use of Java.

A number of attempts have been made to create a JavaScript layer for the Android OS by third party developers, and it is likely that had the V8 engine been available in 2008 Google would almost have certainly chosen to go with it rather than Java as its build language; a great deal of any App development comes down to binding existing component functionality, rather than creating such functionality, which is usually hardware based, from scratch, a task which JavaScript excels. While Google tends to be tight-lipped about its current development plans, its not inconceivable that Android 5.0 will end up being written around the V8 core and use JavaScript, rather than Java, as its primary language.

It should be noted that in the context, two of the hottest development applications today are Appcelerator Titanium and PhoneGap, both of which provide a JavaScript environment for binding applications together. These platforms are popular primarily because they are remarkably cross platform - you can build the same application for iOS as you can for Android, while objectiveC and Java by their very nature limit you to one or the other.

There are several good reasons to suspect this. One of them is the fact that HTML5 is now capable of producing more sophisticated user interfaces then many app developers have available to them natively. Such apps could be deployed as a mix of local framework shell and just in time application code (which is essentially what a web application is), and these applications could also take advantage of robust third party libraries that could also be utilized within web clients and JavaScript servers, which in turn reduces the overall threshold for the skills an application developer needs to maintain.

Additionally, the same things that make JavaScript advantageous in web and server development - closures, functions as objects, weak typing, dynamic declarative programming, event driven design - are perhaps even more appropriate for mobile devices and sensors than they are for the relatively robust world of web browsers.
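Here is a minimal TypeScript sketch of those features working together on a sensor-style stream: handlers are plain function values, a closure keeps running state without a class or global, and everything is driven by events. `SensorHub` and the readings are hypothetical and simulated; real devices expose their own sensor APIs.

```typescript
// A handler is just a function value that receives a reading.
type ReadingHandler = (reading: number) => void;

// A tiny event source standing in for a hardware sensor (hypothetical).
class SensorHub {
  private handlers: ReadingHandler[] = [];

  // Register a listener; returns a function that unregisters it.
  on(handler: ReadingHandler): () => void {
    this.handlers.push(handler);
    // The returned arrow function is a closure over `handler` and `this`.
    return () => {
      this.handlers = this.handlers.filter((h) => h !== handler);
    };
  }

  // Simulate the device pushing a new reading to every listener.
  emit(reading: number): void {
    for (const h of this.handlers) h(reading);
  }
}

// Closure: `count` and `total` persist between calls with no class or global.
function makeRunningAverage(): { handler: ReadingHandler; average: () => number } {
  let count = 0;
  let total = 0;
  return {
    handler: (reading: number) => {
      count += 1;
      total += reading;
    },
    average: () => (count === 0 ? 0 : total / count),
  };
}

const hub = new SensorHub();
const avg = makeRunningAverage();
const unsubscribe = hub.on(avg.handler);

hub.emit(10);
hub.emit(20);
hub.emit(30);
unsubscribe(); // later readings no longer reach the handler
hub.emit(1000);

console.log(avg.average()); // 20
```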

Finally, an HTML5/SVG/CSS/JavaScript stack makes for a powerful virtual machine that can run on phones, tablets, traditional computers and nearly any other device without significant re-engineering from one device to the next. Android's current architecture gets close, but applications still behave inconsistently across devices because of low-level hardware differences.

JavaScript as Services Language. Outside mobile, the next major area where JavaScript is set to explode is services. JSON has been steadily wearing away at the SOAP-based SOA stack for several years now, as well as at the publish/subscribe world of RSS and Atom (both of which now have JSON profiles). Web workers and socket.js are reshaping point-to-point protocols. With JavaScript's increasing dominance in the NoSQL sphere, it's a natural language for the moderate-bandwidth interchange that increasingly drives interactive applications, as opposed to high-speed communication protocols.
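As a rough illustration of why JSON suits this kind of moderate-bandwidth interchange, here is a minimal in-process publish/subscribe broker in TypeScript whose messages cross the "wire" as JSON text. `JsonBroker`, the topic name and the message shape are all hypothetical; this is not the RSS/Atom JSON profiles or any particular messaging library, just the pattern.

```typescript
// Subscribers receive the decoded payload object.
type TopicSubscriber = (payload: unknown) => void;

// A minimal topic-based publish/subscribe broker (hypothetical, in-process).
// Messages are serialized to JSON text, as they would be over HTTP or a socket.
class JsonBroker {
  // Prototype-free map, so topic names like "toString" are safe keys.
  private topics: Record<string, TopicSubscriber[]> = Object.create(null);
  // Raw wire format of every published message, kept for inspection.
  readonly wireLog: string[] = [];

  subscribe(topic: string, fn: TopicSubscriber): void {
    const list = this.topics[topic] || [];
    list.push(fn);
    this.topics[topic] = list;
  }

  // Publish a payload; returns how many subscribers received it.
  publish(topic: string, payload: object): number {
    const wire = JSON.stringify({ topic: topic, payload: payload });
    this.wireLog.push(wire);
    const subs = this.topics[topic] || [];
    // Each subscriber decodes the JSON text itself, as a remote client would.
    for (const fn of subs) fn(JSON.parse(wire).payload);
    return subs.length;
  }
}

const broker = new JsonBroker();
const received: unknown[] = [];
broker.subscribe("orders/created", (p) => received.push(p));

const delivered = broker.publish("orders/created", { id: 42, total: 19.99 });
console.log(delivered, broker.wireLog[0]);
// 1 {"topic":"orders/created","payload":{"id":42,"total":19.99}}
```

A SOAP service would wrap the same two fields in an XML `Envelope` and `Body`; here the JSON text is the whole message, and a browser, a node.js server or a JSON-oriented NoSQL store can all read it directly.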

These are all reasons why I suspect that JavaScript is likely to make its way into the enterprise as perhaps the primary language by decade's end, if not sooner. Most current metrics give JavaScript a fairly low overall position compared to other languages (Tiobe.com places it at #11), but this is primarily because its server-side use in the enterprise is still fairly limited, and because two of the most popular languages right now, Objective-C and Java, owe much of their standing to the explosion of mobile app development. A V8 engine built into a future version of Android would rocket JavaScript into the top tier fairly quickly. The growing trend towards "big data" solutions likewise currently favors Java, but as JavaScript (and node.js in particular) develops its own stack, I think that JavaScript rather than Java will become as pervasive on the server as it is on the web client.

It's also becoming the common tongue that most developers carry in their back pocket, because so much of application development requires at least some web development skills. It may not be anyone's favorite language, but common languages tend to become dominant over time, and as JavaScript establishes itself as a fixture on the server, it's very likely that it will come to own the server, and quickly.

Thus, when people entering the information technology field today ask me which languages they should be learning, I put JavaScript at the top of the list. Learn JavaScript and node.js; HTML5, SVG and Canvas; and CoffeeScript (a very cool language that uses JavaScript as its VM). Learn JavaScript the way it's used today, as a language more evocative of Lisp and Haskell than of Java and C++. It's pervasive, and will only become more so.
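What "evocative of Lisp and Haskell" means in practice is functions as values, composition, currying and folds rather than explicit loops. A minimal TypeScript sketch; the helper names (`compose`, `scaleBy`, `words` and so on) are illustrative only, not from any library.

```typescript
// Functions are ordinary values: `compose` takes two functions and returns a third.
const compose =
  <A, B, C>(f: (b: B) => C, g: (a: A) => B) =>
  (x: A): C =>
    f(g(x));

// Currying: a function that returns a function, enabling partial application.
const scaleBy = (k: number) => (x: number): number => k * x;
const double = scaleBy(2);

// Small pure building blocks with no shared mutable state.
const words = (s: string): string[] => s.split(/\s+/).filter((w) => w.length > 0);
const lengths = (ws: string[]): number[] => ws.map((w) => w.length);

// A fold (reduce), the workhorse of Lisp- and Haskell-style list processing.
const sum = (xs: number[]): number => xs.reduce((acc, x) => acc + x, 0);

// Build a new function by composition instead of writing a loop.
const totalLetters = compose(sum, compose(lengths, words));

console.log(totalLetters("Learn JavaScript the way it is used today")); // 34
console.log([1, 2, 3].map(double));
```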
